How do selfie filters affect AI-based face identification?

AI-based face analysis is used for everything from unlocking your phone to helping self-driving vehicles recognise pedestrians in traffic. But the selfie filters on social media and on your phone can make it much harder to recognise your identity, or even to detect your face. As part of a larger project initiated by the Swedish Research Council, a group of master’s students and researchers at Halmstad University has developed a method to reverse the unwanted effects of these filters.

When it was time for students Pontus Hedman and Vasilios Skepetzis to write their master’s thesis at Halmstad University, they wanted to explore how selfie filters affect AI-based face detection and recognition. They were referred to a group of researchers in the field, who became their supervisors. Together with PhD student Kevin Hernandez-Diaz and Professor Josef Bigun, they have now written a paper called ‘On the effect of selfie beautification filters on face detection and recognition’.

‘The idea of targeting social media filters came from the realisation that people very publicly provide their images on social media platforms. Many times, these images are augmented in some way with the tools provided by the platforms, or occluded due to the nature of how the images are captured’, says Pontus Hedman, who is now working at Orange Cyberdefense as a security analyst.

‘It was interesting to discuss a scenario resembling the funny and fabled “enhance the image” trope seen in fictional crime dramas’, adds Vasilios Skepetzis, who is also working as a security analyst at Orange Cyberdefense.

Filters negatively impact identity recognition

One of Pontus Hedman and Vasilios Skepetzis’ supervisors was Fernando Alonso Fernandez, PhD and senior lecturer in computer vision and image analysis at Halmstad University.

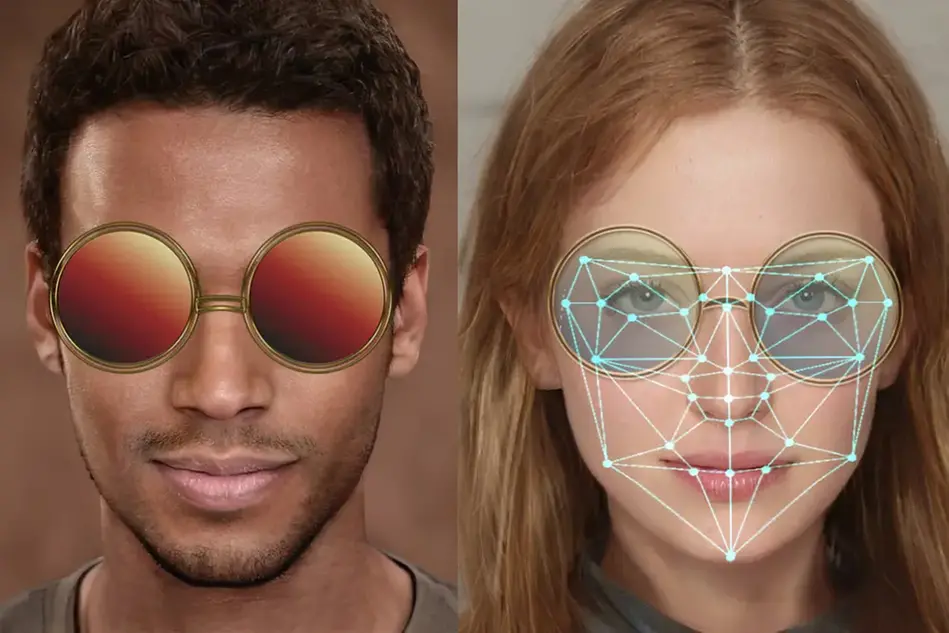

‘This research is about face analysis when it’s captured in the wild, meaning that we don’t control the circumstances. Due to the different filters that are available on social media and video conference applications, the images can be partially occluded, so that you don’t see the entire face. For example, users put on these fancy glasses or animal noses. What happens is that when these parts of the face are occluded, it can impact the functionality of regular algorithms that are not expecting this to happen’, says Fernando Alonso Fernandez.

Fernando Alonso Fernandez, PhD and senior lecturer in computer vision and image analysis.

The filters especially impact face detection and identity recognition negatively if they cover the eyes, and to some extent the nose.

‘There are different problems at work here’, Fernando Alonso Fernandez explains. ‘When you get an image, you don’t know if there are artificial glasses or not, for example, and so you have to detect the face to be able to figure this out. But if the face is partly covered, it might not be possible to detect it.’

Developed a method to revert the filters

As a solution to this problem, Pontus Hedman and Vasilios Skepetzis developed a method that reverses the manipulation caused by the filters, which contributes to better face detection and recognition accuracy. Using filtered images from a database, they trained the system to revert some of these modifications, like artificial glasses, with varying degrees of success.

‘The results were not successful when the original glasses were completely black. But with glasses that are kind of transparent, they were more successful in recovering face detection and recreating the eyes’, says Fernando Alonso Fernandez.

‘A picture has a limited amount of information, as was proved by the poor results of the entirely occluded eyes, but given a few breadcrumbs we were able to help through generative algorithms’, explains Vasilios Skepetzis.
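The difference between transparent and completely black glasses can be illustrated with a toy example. The sketch below is not the students' method, which relied on trained generative algorithms. It simply assumes that a filter is alpha-blended onto the image and that the overlay and its opacity are known; the function names and the random "eye region" are hypothetical. With a semi-transparent overlay the original pixels can be recovered, while a fully opaque overlay leaves nothing to recover.

```python
import numpy as np

def apply_overlay(face, overlay, alpha):
    """Alpha-blend an overlay (e.g. filter glasses) onto a face patch."""
    return (1.0 - alpha) * face + alpha * overlay

def invert_overlay(blended, overlay, alpha):
    """Undo the blend, assuming the overlay and alpha are known.

    Returns None for a fully opaque overlay, because the original
    pixels then contribute nothing to the blended image.
    """
    if alpha >= 1.0:
        return None
    return (blended - alpha * overlay) / (1.0 - alpha)

rng = np.random.default_rng(0)
eyes = rng.random((8, 16))        # stand-in for an eye region, values in [0, 1)
glasses = np.zeros_like(eyes)     # black overlay

tinted = apply_overlay(eyes, glasses, alpha=0.7)   # semi-transparent glasses
opaque = apply_overlay(eyes, glasses, alpha=1.0)   # completely black glasses

recovered = invert_overlay(tinted, glasses, alpha=0.7)
print(np.allclose(recovered, eyes))                # True
print(invert_overlay(opaque, glasses, alpha=1.0))  # None
```

In practice neither the overlay nor its opacity is known in advance, which is why a learned model is needed to estimate what lies underneath.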

In addition, Pontus Hedman and Vasilios Skepetzis used filtered images to train the face recognition algorithm itself. They found that both approaches made it easier to recognise faces that were partly covered by selfie filters.
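Training on filtered images is a form of data augmentation. As a minimal sketch, assuming grayscale face crops stored as NumPy arrays and a fixed, hypothetical eye region, synthetic glasses could be added to some of the training images like this. The function names, box coordinates and opacity range are illustrative assumptions, not details from the study.

```python
import numpy as np

def add_synthetic_glasses(image, box, alpha):
    """Darken a rectangular region to imitate black filter glasses.

    box is (top, bottom, left, right) in pixels; alpha=1.0 is fully opaque.
    """
    top, bottom, left, right = box
    out = image.copy()                       # leave the original untouched
    out[top:bottom, left:right] *= (1.0 - alpha)
    return out

def augment_batch(images, box, rng, p=0.5):
    """Occlude each image with probability p, using a random opacity."""
    augmented = []
    for image in images:
        if rng.random() < p:
            image = add_synthetic_glasses(image, box, alpha=rng.uniform(0.3, 1.0))
        augmented.append(image)
    return np.stack(augmented)

rng = np.random.default_rng(0)
faces = rng.random((4, 32, 32))              # stand-ins for grayscale face crops
eye_box = (10, 16, 4, 28)                    # hypothetical eye region
training_batch = augment_batch(faces, eye_box, rng)
print(training_batch.shape)
```

A recognition model trained on such a mix sees both clean and occluded versions of faces, so it is less likely to depend on the eye region alone.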

The results were not successful when the glasses completely covered the eyes, but with transparent glasses it was easier for the algorithm to recognise faces and recreate the eyes.

Results could lead to more efficient face identification

The results of the study were published in Pattern Recognition Letters, a well-respected peer-reviewed scientific journal that is in the top 20 in the field, and the paper has been cited by several other researchers.

‘We all think it’s very nice that our students have been generating a thesis that is worthy of public attraction, and after they graduated, they were both able to quickly find jobs in the field’, says Fernando Alonso Fernandez.

Fernando Alonso Fernandez hopes that these results can lead to more efficient identification based on face analysis, something that has become much more attractive since the pandemic because you don’t have to touch any sensors to be identified.

‘Face identification is already in use in many airports, for example, but it requires the face not to be occluded’, Fernando Alonso Fernandez explains. ‘Currently, when you are about to cross the automatic gates and have your face scanned, you first need to remove anything that covers the face, such as glasses. There is also the case of automatic vehicles and robots working in healthcare needing to be able to recognise and interact with people. For this to work, they need to be able to correctly identify people who are wearing glasses or face masks, for example.’

Can you trust the technology?

The group is aware of concerns about the negative consequences of developing this kind of technology – questions of this sort are usually the first to come up when they mention their work.

‘Such concerns are fair. For example, machines can become biased based on their training dataset – as can humans. That’s why these tools need to be understood and used with caution given the sensitivity of their application’, says Vasilios Skepetzis.

Fernando Alonso Fernandez agrees, saying ‘Of course, if there is misuse of these technologies, it could lead to negative consequences – it’s the same with many other technologies that can be used for both good and bad. It all comes down to legislation, and in this case, privacy legislation. We have to create trust. People have to be able to place their trust in these systems.’

While being able to trust these technologies is vital, Pontus Hedman also thinks it is important to remain critical. ‘In the future, we will see face recognition and digital identity play a larger role in society. People would do well to consider what type of data they provide freely and publicly’, says Hedman.

Endless possibilities with the right funding

While Pontus Hedman and Vasilios Skepetzis are now working in the cyber security industry, Fernando Alonso Fernandez and his fellow researchers at Halmstad University continue their research in face recognition, with the help of various funders interested in the subject. In June, for example, he and his colleagues met with a foundation that hopes this kind of technology can help prevent child abuse.

They are also a part of a group of people from universities all around Sweden trying to create a national centre for digital forensics research.

‘The possibilities with this kind of research are endless – it all just comes down to companies and politicians finding out about it, and deciding to fund it’, Fernando Alonso Fernandez concludes.

Text: Emma Swahn

Photo: Ida Fridvall (portrait) and Pixabay (collage)